Highlighting work orders or service requests in color based on their priority and due date is a common requirement. While this is easy to implement in the applications, it is harder to achieve in Start Center result sets because their color-coding functionality is quite limited.

I have seen this question pop up a few times in various forums, but I haven't found detailed instructions posted anywhere online. Hopefully, this post provides enough detail for non-technical consultants to implement this for their clients.

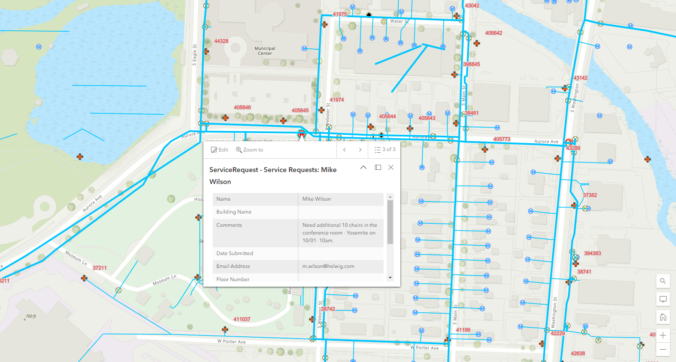

I will demonstrate the steps to implement this with a simple requirement. A customer wants to display a list of Service Requests and highlight them in color as follows:

- Priority 1 – Urgent: Green if the SR is less than 30 minutes old, Orange if it is less than 2 hours old, and Red if it is older than 2 hours.

- Priority 2 – High: Green for less than 2 hours, Orange for less than 8 hours, and Red for more than 8 hours.

- Medium/Low: Green for less than 1 day, Orange for less than 3 days, and Red for more than 3 days.
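To make the rules above unambiguous before configuring anything, here is a minimal Python sketch of the decision logic. It assumes the priority is an integer (1 = Urgent, 2 = High), that any other value, including an empty priority, falls under Medium/Low, and that an age exactly on a boundary counts as the next color. The function name `sr_color` is hypothetical.

```python
from datetime import timedelta

# (green_limit, orange_limit) per priority. An age at or above the orange
# limit is red. Assumption: any priority other than 1 or 2 (including None)
# uses the Medium/Low thresholds.
THRESHOLDS = {
    1: (timedelta(minutes=30), timedelta(hours=2)),  # Urgent
    2: (timedelta(hours=2), timedelta(hours=8)),     # High
}
DEFAULT_THRESHOLDS = (timedelta(days=1), timedelta(days=3))  # Medium/Low


def sr_color(priority, age):
    """Return 'GREEN', 'ORANGE' or 'RED' for an SR of the given priority and age."""
    green_limit, orange_limit = THRESHOLDS.get(priority, DEFAULT_THRESHOLDS)
    if age < green_limit:
        return "GREEN"
    if age < orange_limit:
        return "ORANGE"
    return "RED"


print(sr_color(1, timedelta(minutes=45)))  # ORANGE
print(sr_color(2, timedelta(hours=9)))     # RED
print(sr_color(3, timedelta(hours=5)))     # GREEN
```

Keeping the thresholds in a table means a priority's limits can be changed without touching the branching logic.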

However, color coding in a Start Center Result Set is quite limited:

- The condition can only use a few simple operators such as Less Than, Equal, and Greater Than; there is no option to use a Conditional Expression.

- The condition must be based on a field displayed in the result set.

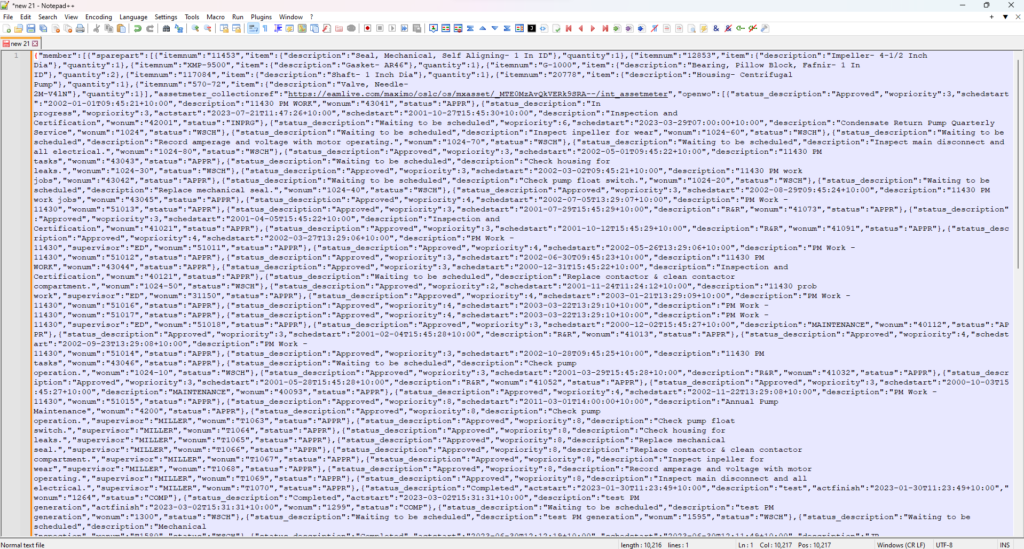

To work around this limitation, we can add a non-persistent field and populate it with a value calculated by a Formula or an automation script, which can contain logic as complex as needed. The result set then only has to apply a simple comparison to that field. The steps are as follows:

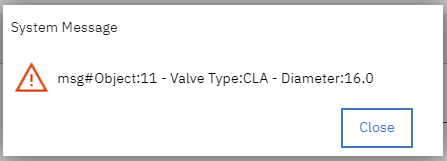

Step 1: In the Database Configuration app, add a new attribute to the SR object, then run Apply Configuration Changes:

- Attribute Name: COLORCODE

- Data Type: Integer

- Persistent?: Unchecked

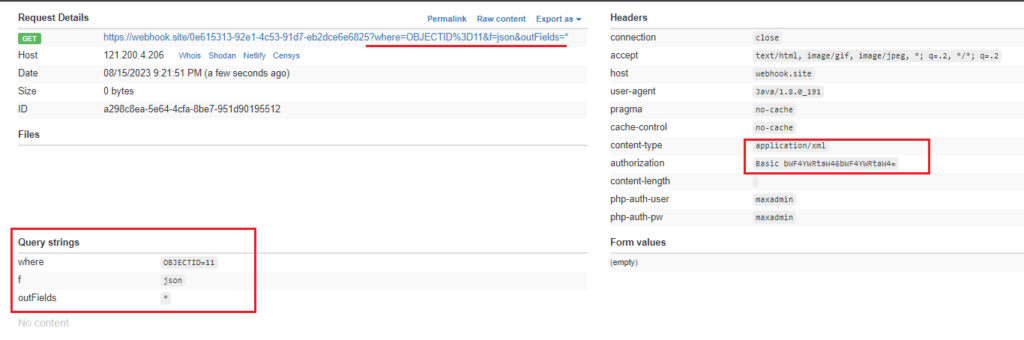

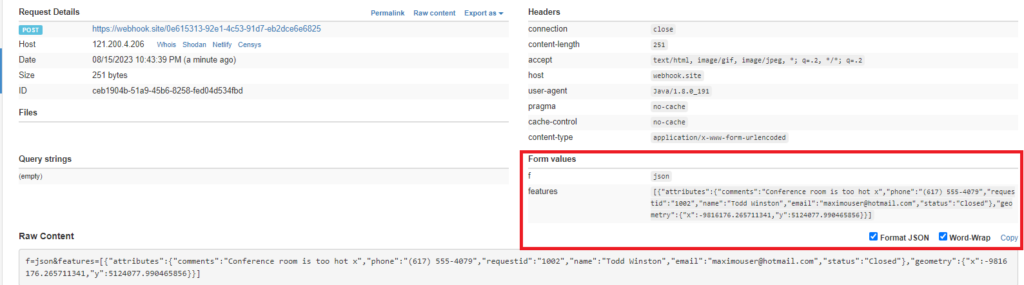

Step 2: In the Object Structures app, create a new object structure:

- Object Structure: RPSR

- Consumed By: REPORTING

- Source Objects: add SR

- Exclude/Include Fields action: include the non-persistent COLORCODE field.

Step 3: In the Automation Scripts app, create a new script with an Attribute Launch Point:

- Launch Point: COLORCODE

- Object: SR

- Attribute: COLORCODE

- Events: Initialize Value

- Script Name: SR_COLORCODE_INIT

- Language: Python

- Source: as shown in the screenshots below

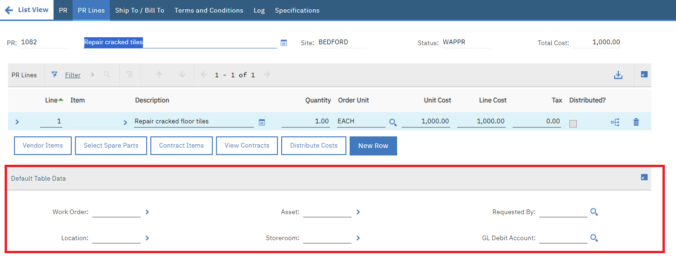

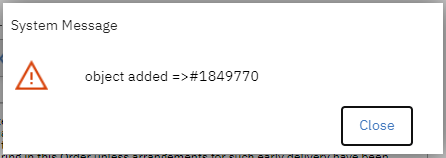

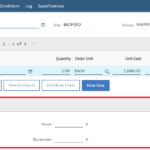

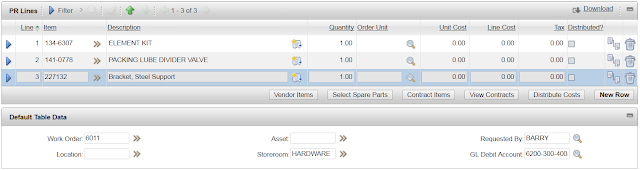
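The actual script source is in the screenshots above. For readers who want the logic in text form, the following is a hedged sketch of what an Initialize Value script body could look like, written as plain Python so it runs outside Maximo. Several parts are assumptions: `StubMbo` is a hypothetical stand-in for the `mbo` variable Maximo passes to the script, the source attributes are assumed to be `REPORTDATE` and `REPORTEDPRIORITY`, and the codes 1/2/3 for Green/Orange/Red are chosen for illustration. In a real Jython script, `getDate` returns a `java.util.Date` rather than a Python `datetime`, so the age calculation would need adapting, and `setValue` may need an access flag such as `MboConstants.NOACCESSCHECK`.

```python
from datetime import datetime, timedelta

# Hypothetical color codes; they must match the values used in the
# Start Center Color Options.
GREEN, ORANGE, RED = 1, 2, 3

# (green_limit, orange_limit) per reported priority; other values = Medium/Low.
THRESHOLDS = {
    1: (timedelta(minutes=30), timedelta(hours=2)),
    2: (timedelta(hours=2), timedelta(hours=8)),
}
DEFAULT_THRESHOLDS = (timedelta(days=1), timedelta(days=3))


class StubMbo:
    """Hypothetical stand-in for Maximo's 'mbo' variable, for local testing only."""

    def __init__(self, values):
        self.values = dict(values)

    def isNull(self, attr):
        return self.values.get(attr) is None

    def getInt(self, attr):
        # Like Maximo, treat an empty integer field as 0.
        return self.values.get(attr) or 0

    def getDate(self, attr):
        return self.values.get(attr)

    def setValue(self, attr, value):
        self.values[attr] = value


def init_colorcode(mbo, now):
    """Sketch of the Initialize Value logic: compute COLORCODE for one SR."""
    if mbo.isNull("REPORTDATE"):
        return  # leave Color Code empty when there is no reported date
    age = now - mbo.getDate("REPORTDATE")
    priority = mbo.getInt("REPORTEDPRIORITY")
    green_limit, orange_limit = THRESHOLDS.get(priority, DEFAULT_THRESHOLDS)
    if age < green_limit:
        code = GREEN
    elif age < orange_limit:
        code = ORANGE
    else:
        code = RED
    mbo.setValue("COLORCODE", code)


now = datetime(2024, 1, 1, 12, 0)
sr = StubMbo({"REPORTDATE": now - timedelta(hours=1), "REPORTEDPRIORITY": 1})
init_colorcode(sr, now)
print(sr.values["COLORCODE"])  # 2 (Orange)
```

Because the field is non-persistent, the value is recalculated each time the record is loaded, so an SR moves from Green to Orange to Red as it ages without any update to the database.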

Step 4: Edit the Start Center template, add a Result Set to the Start Center, then edit it:

- Application: SR

- Query: All Service Requests (or select any Saved Query you want to use)

- In the Object List, make sure to select the object structure created in Step 2

- Add the fields you want to display. In this case, I added Service Request, Summary, Reported By, Reported Date, Reported Priority, and Color Code. Note that the new non-persistent Color Code field must be added, because a color condition can only use a field displayed in the result set

- In the Color Options tab, set up the three color options as shown in the images below.

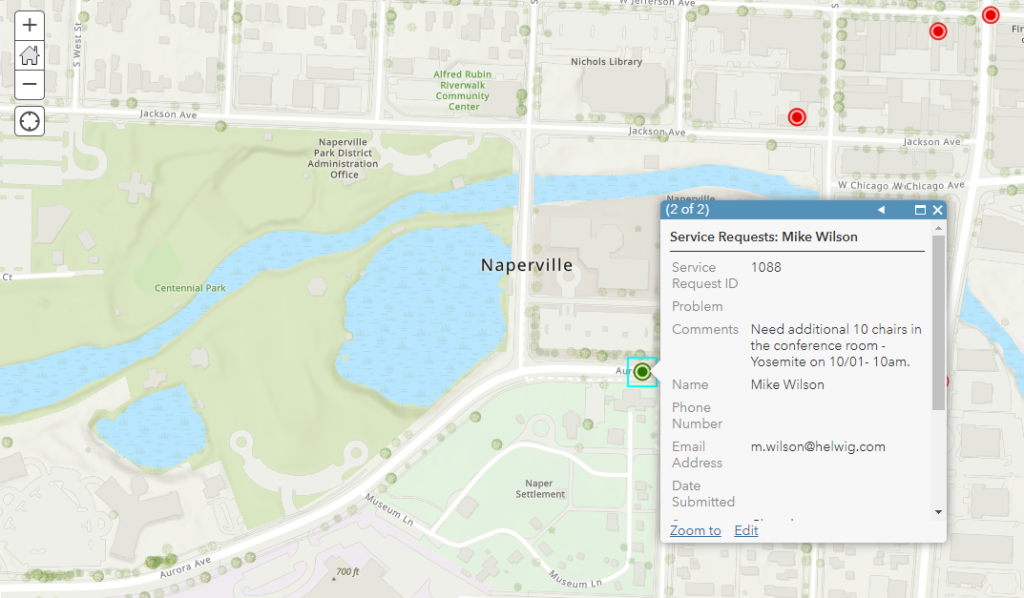

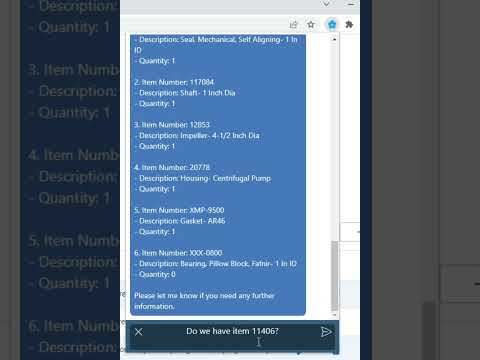
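Because the Color Options only compare a displayed field against a constant, the complex logic has already been reduced to one value per row by the time the result set evaluates it. The sketch below models that evaluation in Python under stated assumptions: the three options test `COLORCODE` with Equal against the hypothetical codes 1, 2 and 3 (the exact values are in the screenshots), and the first matching option wins, which is a simplification rather than documented Maximo behavior. The names `COLOR_OPTIONS` and `row_color` are hypothetical.

```python
# Hypothetical Color Options: (field, operator, value, color).
COLOR_OPTIONS = [
    ("COLORCODE", "=", 1, "Green"),
    ("COLORCODE", "=", 2, "Orange"),
    ("COLORCODE", "=", 3, "Red"),
]

# The few simple operators a result set color option offers.
OPERATORS = {
    "=": lambda a, b: a == b,
    "<": lambda a, b: a < b,
    ">": lambda a, b: a > b,
}


def row_color(row):
    """Return the color of the first matching option, or None for no highlight."""
    for field, op, value, color in COLOR_OPTIONS:
        cell = row.get(field)
        if cell is not None and OPERATORS[op](cell, value):
            return color
    return None


print(row_color({"TICKETID": "1001", "COLORCODE": 3}))     # Red
print(row_color({"TICKETID": "1002", "COLORCODE": None}))  # None
```

A row with no Color Code value gets no highlight, which is the first symptom covered in the troubleshooting notes below.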

If everything is set up correctly, the Start Center Result Set should display values in the Color Code column, and the records should be highlighted in the correct colors.

Troubleshooting:

- If the Color Code field does not display a value, check the automation script that initializes the field's value.

- If the Color Code field is not available when adding fields to the Result Set, the custom object structure is probably not selected. The Result Set always has a default “Service Requests” item in the Object List, even when no object structure of type REPORTING exists. If you have multiple object structures for the SR object, give each one a distinctive description so you can identify it easily.

- After deploying the Start Center to production, various issues could prevent it from displaying properly; please refer to my previous post for more details on how to troubleshoot them.